Speakers


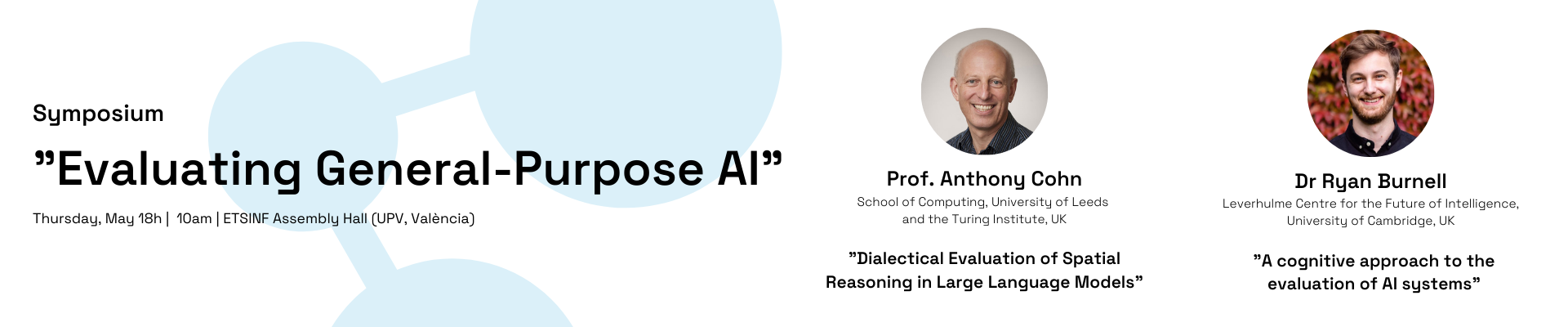

Prof. Anthony Cohn, School of Computing, University of Leeds and the Turing Institute, UK


Dr Ryan Burnell, Leverhulme Centre for the Future of Intelligence, University of Cambridge, UK


Evaluating General-Purpose AI


Symposium on “Evaluating General-Purpose AI”, featuring the following speakers:

- Professor Anthony Cohn from the School of Computing at the University of Leeds and the Turing Institute, UK

- Title: “Dialectical Evaluation of Spatial Reasoning in Large Language Models”

- Abstract: Many claims have been made about the abilities of Large Language Models (LLMs). Here we use a dialectical approach to investigate the extent to which they can reason correctly about spatial information, in particular about commonsense spatial situations and geo-spatial texts. Rather than evaluating an LLM on a set of benchmark data and reporting aggregate statistics, we conduct a series of dialogues with such a system in order to find failures in reasoning and thus map the boundaries of the system. We conclude with some suggestions for future work, both to improve the capabilities of language models and to systematise this kind of dialectical evaluation.

- Dr Ryan Burnell from the Leverhulme Centre for the Future of Intelligence at the University of Cambridge, UK

- Title: “A cognitive approach to the evaluation of AI systems”

- Abstract: The capabilities of AI systems are improving rapidly, and these systems are being deployed in increasingly complex and high-stakes contexts. As the importance of AI grows, so too does the need for robust evaluation. If we want to determine the extent to which systems are safe, effective, and unbiased, it is vital that we understand their cognitive capabilities. In this endeavour, psychological science has much to offer: scientists in cognitive, developmental, and comparative psychology have spent many decades developing theories and paradigms to understand the cognitive capabilities of adults, children, and animals. Drawing on these theories and paradigms, we are working to build a framework for evaluating the cognitive capabilities of AI systems that we hope can be used to better track and regulate AI progress. I will present an initial version of the framework and discuss the open questions and challenges of applying cognitive science to AI evaluation.

This event is organised and sponsored by VRAIN-UPV, ValGRAI, FLI, and TAILOR.