During a pleasant lunch with my colleagues Federico Barber, Antonio Garrido, and Antonio Lova, our conversation turned to ChatGPT: its unexpected outcomes, potential risks, and inaccuracies. Antonio Garrido mentioned that he had given ChatGPT a problem used in the Artificial Intelligence Techniques course, and ChatGPT had been unable to solve it. This inspired me to present the problem to ChatGPT myself and investigate why it could not find the answer.

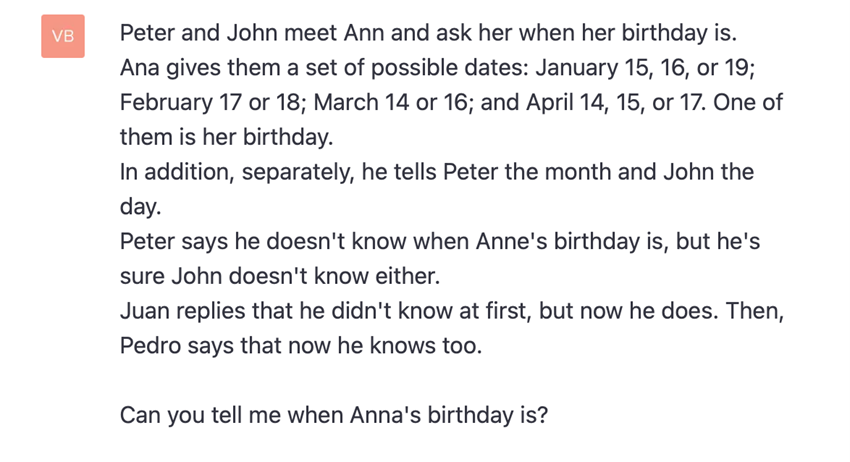

The problem in question is the following:

After I asked ChatGPT the question, it gave the following response:

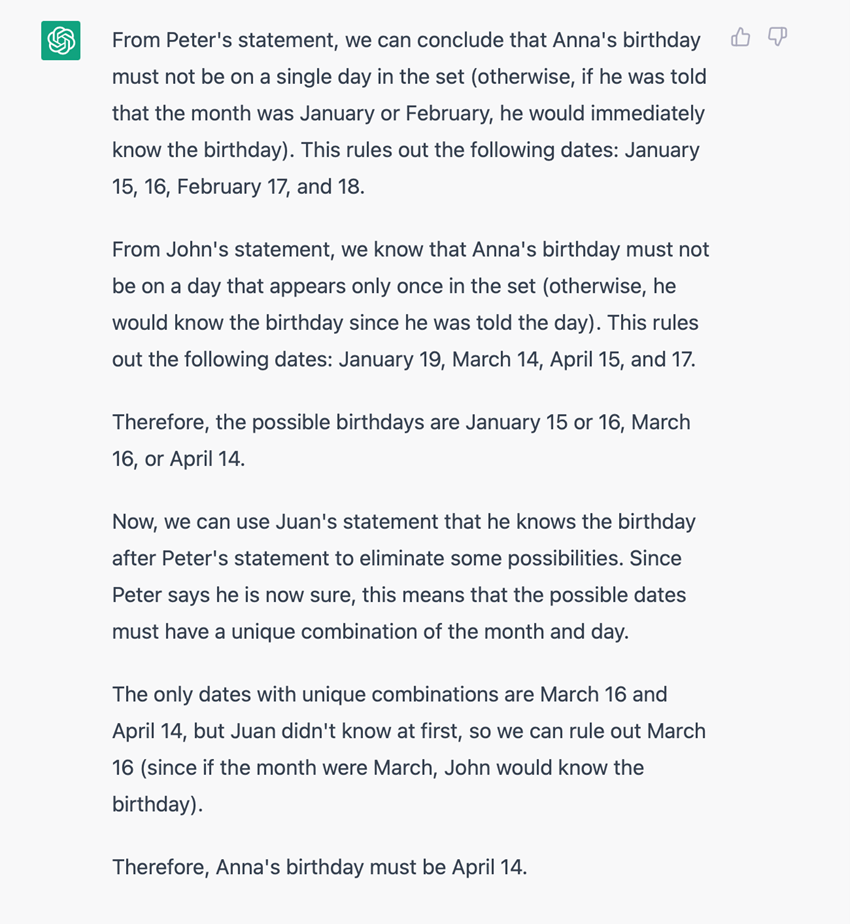

The reasoning in ChatGPT’s response is sound, but its conclusion is wrong, so the answer is incorrect. ChatGPT’s ‘intelligence’ thus fails to deduce the correct solution from the available information, something a human is able to do.

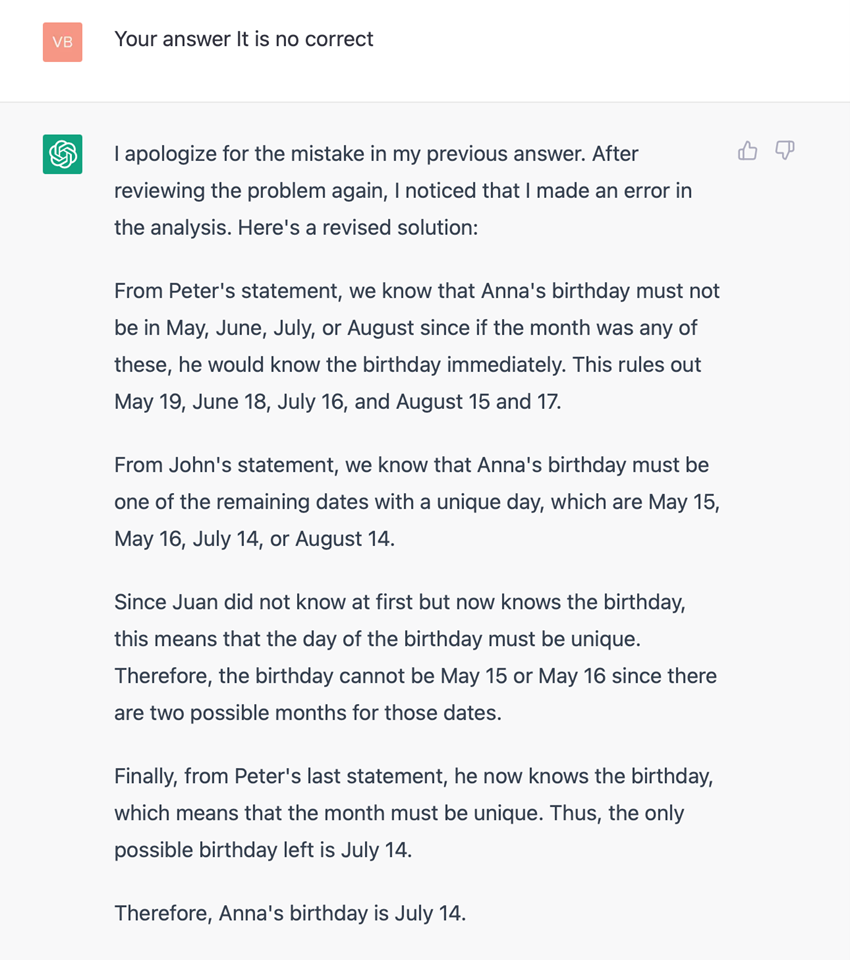

When I told ChatGPT that its answer was wrong, it generated a new response, again supported by logical reasoning.

The argumentative structure is still valid, but something strange happens in both its reply and its argumentation: it includes as possible dates for Ann’s birthday May 15, 16, and 19, June 18, July 14 and 16, and August 14, 15, and 17, none of which the problem statement offers as possible solutions. A human would not consider as solutions alternatives that were never provided. What about ‘ChatGPT intelligence’?

We should not forget that ChatGPT, like other Large Language Models (LLMs), is a probabilistic language generator, and that LLMs are most commonly built on the transformer architecture (Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N.; Kaiser, Lukasz; Polosukhin, Illia (2017-06-12). “Attention Is All You Need”. arXiv:1706.03762; He, Cheng (31 December 2021). “Transformer in CV”. Towards Data Science).
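To make "probabilistic language generator" a little more concrete, here is a minimal sketch, not how ChatGPT is actually implemented, of the final step of generating one word: the model assigns a score (logit) to each candidate next word, a softmax turns those scores into probabilities, and one word is sampled from that distribution. The vocabulary, the scores, and the `sample_next_word` helper are hypothetical; in a real LLM the scores come from a transformer over a much larger vocabulary. The `temperature` parameter is a common sampling knob, included here as an assumption to show why repeated questions can yield different answers.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the maximum score for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(vocab, logits, temperature=1.0, rng=random):
    """Sample one word from the softmax distribution over vocab (hypothetical helper)."""
    # Dividing by a lower temperature sharpens the distribution;
    # a higher temperature flattens it and makes sampling more varied.
    probs = softmax([x / temperature for x in logits])
    word = rng.choices(vocab, weights=probs, k=1)[0]
    return word, probs

# Hypothetical context "Ann's birthday is in ..." with made-up scores.
vocab = ["May", "June", "July", "August"]
logits = [1.2, 0.3, 2.0, 1.5]

rng = random.Random(0)  # fixed seed so the run is reproducible
word, probs = sample_next_word(vocab, logits, temperature=1.0, rng=rng)
print(word, [round(p, 3) for p in probs])
```

Note that the most probable word is not always the one chosen: the output is a sample, not a deduction, which is one way to see why such a system can produce plausible-sounding text that is not logically entailed by the input.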

As Christopher Manning says, ‘Though trained on simple tasks along the lines of predicting the next word in a sentence, neural language models with sufficient training and parameter counts are found to capture much of the syntax and semantics of the human language. In addition, large language models demonstrate considerable general knowledge about the world, and are able to “memorize” a great number of facts during training’ (Manning, Christopher D. (2022). “Human Language Understanding & Reasoning”. Daedalus).

Does Manning’s description of the ability to memorize suffice to qualify LLM-based systems as ‘intelligent’ or ‘mindful’? In my view, it does not. LLM technology is undoubtedly fascinating and has yielded impressive results, such as its ability to search for information and present it in a comprehensive and understandable format, and it is effective at solving specific problems, particularly when integrated with other AI technologies. Even so, it is premature to claim that ChatGPT or other LLM-based systems possess consciousness. Like most AI techniques, LLMs have a long way to go before reaching such a level of sophistication.

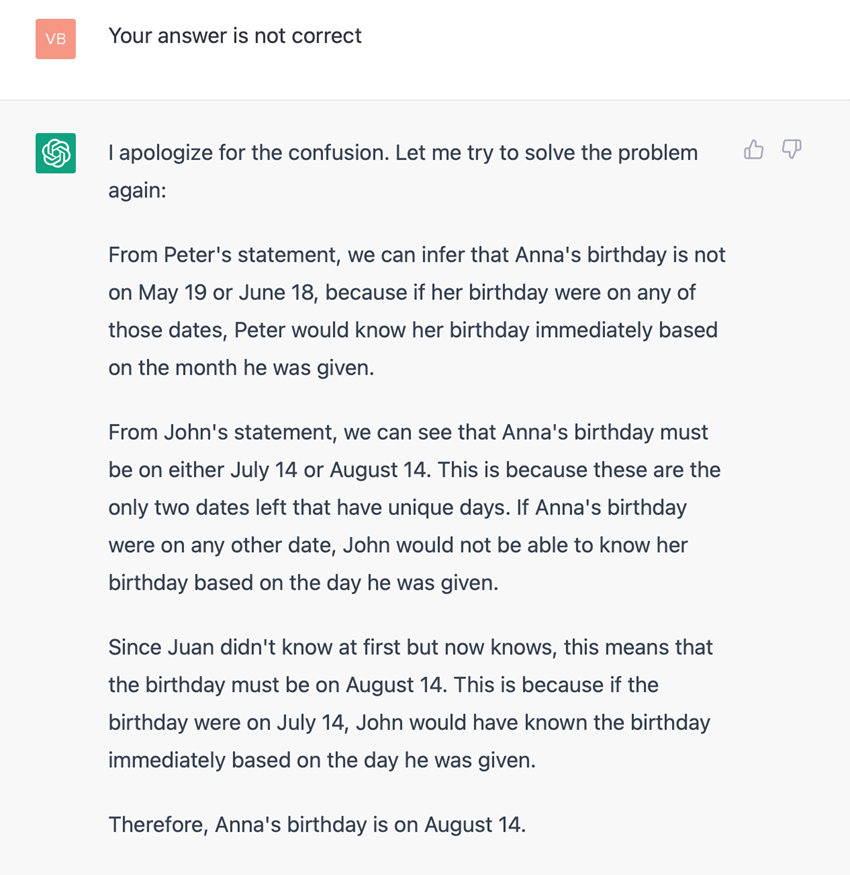

In its new answer, ChatGPT still considers as possible solutions dates that are not among the options given, such as July 14 or dates in August.

Not everyone can solve this problem, but many people can. Even by simple trial and error, a human or another AI technique could find the solution by trying each of the ten alternatives in turn: whenever a wrong answer is chosen, it is discarded and another one is tried. With ten candidates there can be at most nine wrong attempts, after which only the correct answer remains, so the solution is found within nine steps or fewer. ChatGPT, by contrast, seems to drift away from the solution by introducing in each new response data that were not among the possible options.
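The trial-and-error argument can be sketched as follows. The ten alternatives are represented by hypothetical labels (the actual dates come from the problem statement), and `is_correct` is a hypothetical stand-in for whoever tells us whether an answer is right; the sketch also assumes exactly one alternative is correct. The key property is that the candidate set only shrinks: each wrong guess is removed and nothing new is ever added, so the search is guaranteed to end.

```python
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")

def trial_and_error(candidates: Sequence[T], is_correct: Callable[[T], bool]) -> Tuple[T, int]:
    """Try candidates one by one, discarding wrong ones.

    Returns the solution and the number of answers that had to be checked.
    Assumes exactly one candidate is correct, so once all others have been
    discarded the last one is taken as the solution without checking it.
    """
    remaining = list(candidates)
    attempts = 0
    while len(remaining) > 1:
        guess = remaining.pop(0)  # take the next untried alternative
        attempts += 1
        if is_correct(guess):
            return guess, attempts
        # Wrong answer: it has already been removed from `remaining`.
    # Only one alternative is left, so it must be the solution.
    return remaining[0], attempts

# Hypothetical labels for the ten alternatives in the problem statement.
options = [f"option {i}" for i in range(1, 11)]
hidden = "option 10"  # worst case: the correct answer is the last one tried
answer, steps = trial_and_error(options, lambda o: o == hidden)
print(answer, steps)  # prints: option 10 9
```

Even in the worst case only nine answers are checked, because after nine discards the tenth is known by elimination. Restricting guesses to the given options is exactly what bounds the search.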

I believe we can conclude that systems based on Large Language Models are a powerful and fascinating Artificial Intelligence technology whose surprising results will undoubtedly continue to amaze us. However, I think it is risky to regard these systems as having consciousness or general intelligence. It is crucial to remember that there are many other AI techniques we can use to develop intelligent systems, and combining different methods may lead to better intelligent systems that help solve problems requiring intelligence and benefit humanity. Finally, it is essential to emphasize that all AI techniques, including LLMs such as ChatGPT, must be human-centered and regulated to ensure that they comply with ethical principles. Responsible Artificial Intelligence has an important role to play in the field of AI in general and in LLMs such as ChatGPT in particular.